Beyond Glue Code: How I Build GenAI Agents & Data Pipelines at Speed with Palantir

Published on 21 Jan 2026


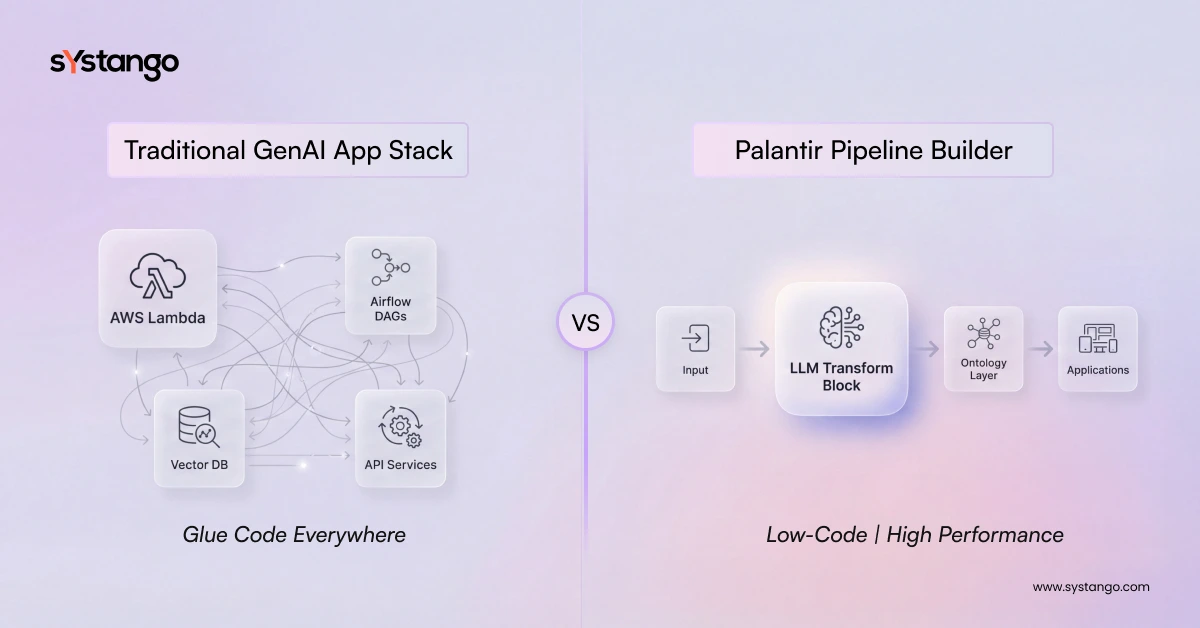

In the traditional world of data engineering and application development, we spend an inordinate amount of time on "plumbing." We stitch together AWS Lambda functions, wrestle with Airflow DAGs to manage dependencies, operate vector databases for RAG architectures, and write endless boilerplate just to get data from Point A to Point B. We get so bogged down in maintaining the infrastructure (monitoring uptime, debugging API rate limits, managing secrets) that the actual business logic often becomes a secondary concern.

I recently spent time deep-diving into Palantir’s ecosystem, specifically through their AI Engineer learning tracks, and the shift in perspective is jarring. It’s the difference between building a car from parts in your garage and driving a high-performance vehicle off the lot.

This post explores why I’m moving my GenAI app development, agentic workflows, and data pipelines to Palantir, focusing on the speed of Pipeline Builder, the power of the Ontology, and the critical importance of security.

1. The Engine Room: Palantir Data Foundry & Pipeline Builder

The core promise of Palantir Foundry is removing the friction between "raw data" and "ready-to-use asset."

Seamless Integration & Real-Time Processing

Foundry acts as a central operating system for your data. It connects to virtually any external source (S3 buckets, SQL databases, REST APIs, or on-prem legacy systems), so you can start ingesting data quickly. The platform handles connection pooling and schema inference, meaning you can stop writing custom connectors and start working with the data immediately.

But the real magic happens in Pipeline Builder. Unlike writing PySpark scripts from scratch, where you have to manually optimize joins and manage memory partitions, Pipeline Builder offers a visual, low-code interface that compiles down to highly optimized Spark code. I recently built a pipeline that parses PDFs using LLMs directly within the transform logic. Instead of setting up a separate LangChain service to OCR and chunk documents (which introduces latency and another point of failure), I dropped an LLM transform directly into my pipeline. Within minutes, I was extracting structured fields like "Invoice Date," "Total Amount," and "Vendor Name" from unstructured text blobs.

Beyond Basic ETL: Streaming & UDFs

For use cases where batch processing isn't fast enough, Pipeline Builder supports streaming pipelines, with data processed in under 15 seconds on average. This is critical for GenAI agents that need to react to live operational data. Imagine an agent designed to assist customer support: it needs to know about the transaction that failed five seconds ago, not the one that appears in tomorrow's daily report.

While the pre-built transforms cover 90% of needs, the platform doesn't lock you in. You can write User-Defined Functions (UDFs) in Python or Java for custom logic. However, a key lesson from the Deep Dive tracks is to use UDFs judiciously. Because built-in transforms are optimized for the underlying compute engine, they run faster and more cheaply. The strategy is to modularize your pipeline code just like any other software: stick to built-ins for the heavy lifting, and reserve UDFs for unique, domain-specific logic that cannot be expressed otherwise.
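As an illustration of logic that plausibly justifies a UDF, here is the IBAN check-digit test (the mod-97 rule from ISO 13616), written as a plain Python function. The example is illustrative, and how such a function would be registered as a Pipeline Builder UDF is not shown; simple filters, casts, and joins should stay with the built-in transforms.

```python
import string

# Letters map to numbers per the IBAN check-digit scheme: A=10, B=11, ..., Z=35.
_LETTER_VALUES = {c: str(i) for i, c in enumerate(string.ascii_uppercase, start=10)}


def is_valid_iban(iban: str) -> bool:
    """Validate an IBAN's check digits with the mod-97 rule."""
    compact = iban.replace(" ", "").upper()
    if not (15 <= len(compact) <= 34) or not (compact.isascii() and compact.isalnum()):
        return False
    # Move the country code and check digits to the end, then expand letters to digits.
    rearranged = compact[4:] + compact[:4]
    digits = "".join(_LETTER_VALUES.get(ch, ch) for ch in rearranged)
    return int(digits) % 97 == 1
```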

Architecting for Speed vs. Extensibility

A major takeaway from my recent "speedrun" was the strategy around pipeline segmentation. It changes how you view data lineage.

- Optimizing for Latency: If you need speed, you build a single, streamlined pipeline from Raw -> Ontology. This reduces the overhead of materializing intermediate datasets, ensuring data travels from source to user interface as quickly as possible.

- Optimizing for Extensibility: For complex enterprise systems, we break pipelines into "Cleaning," "Joining," and "Ontology" segments. This modular approach allows us to reuse cleaned datasets across multiple projects. If I clean the "Customer Master" table once, ten different AI agents can consume it without re-processing the raw data, ensuring consistency and saving compute resources.
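The extensibility pattern can be sketched with a toy store in which each named stage is materialized once and then shared. `DatasetStore`, the dataset name `customer_master_clean`, and the cleaning rules are hypothetical; in Foundry the cleaned output would be a persisted dataset rather than an in-memory dict.

```python
from typing import Callable

Rows = list[dict[str, str]]


class DatasetStore:
    """Toy stand-in for materialized datasets: each named stage is built once, then reused."""

    def __init__(self) -> None:
        self._materialized: dict[str, Rows] = {}
        self.build_counts: dict[str, int] = {}

    def get(self, name: str, build: Callable[[], Rows]) -> Rows:
        if name not in self._materialized:
            self._materialized[name] = build()
            self.build_counts[name] = self.build_counts.get(name, 0) + 1
        return self._materialized[name]


RAW_CUSTOMERS: Rows = [
    {"id": " 001 ", "name": "acme ltd", "country": "uk"},
    {"id": "002", "name": "Globex", "country": "US"},
    {"id": "001", "name": "ACME LTD", "country": "UK"},  # duplicate of 001 once trimmed
]


def clean_customers() -> Rows:
    """'Cleaning' segment: trim, normalize case, keep the first row per id."""
    seen: dict[str, dict[str, str]] = {}
    for row in RAW_CUSTOMERS:
        cid = row["id"].strip()
        seen.setdefault(cid, {
            "id": cid,
            "name": row["name"].strip().title(),
            "country": row["country"].strip().upper(),
        })
    return list(seen.values())


store = DatasetStore()


def support_agent_view() -> list[str]:
    return [c["name"] for c in store.get("customer_master_clean", clean_customers)]


def billing_agent_view() -> dict[str, str]:
    return {c["id"]: c["country"] for c in store.get("customer_master_clean", clean_customers)}
```

Both agent views read the same cleaned rows, and `store.build_counts` shows the cleaning step ran once. The latency-optimized variant would instead inline `clean_customers` into each consumer, skipping the intermediate dataset at the cost of repeating the work.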

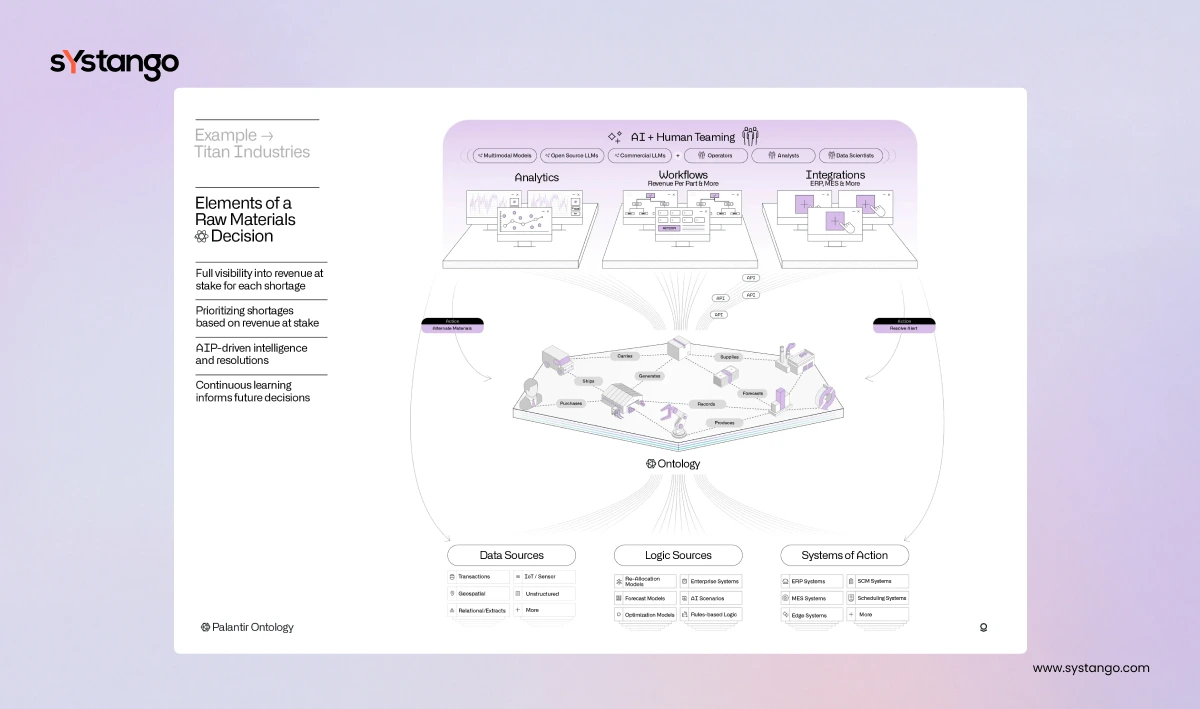

The diagram below shows how Palantir’s Ontology connects data sources, logic, and operational systems to enable AI-driven decision-making.

2. The Semantic Layer: Why The Ontology Matters

If Pipeline Builder is the engine, the Ontology is the steering wheel.

In traditional stacks, "data" is just rows and columns in a database. If a non-technical stakeholder wants to ask, "How many factories are at risk?", they need a SQL analyst to write a query joining three different tables.

Palantir’s Ontology abstracts this away. It maps your technical data (tables, streams, logs) to real-world concepts (e.g., "Factory," "Machine," "Alert," "Employee"). It is a semantic layer that hides the data engineering mess from the operators.
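To show the difference, here is the "factories at risk" question answered by walking typed objects and their links, using plain Python dataclasses rather than Palantir's Ontology SDK. The object types mirror the examples above; the `at_risk` rule (any machine with a high-severity alert) and the sample data are assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class Alert:
    alert_id: str
    severity: str  # hypothetical values: "low" or "high"


@dataclass
class Machine:
    machine_id: str
    alerts: list[Alert] = field(default_factory=list)  # link: Machine -> Alert


@dataclass
class Factory:
    name: str
    machines: list[Machine] = field(default_factory=list)  # link: Factory -> Machine

    def at_risk(self) -> bool:
        """Hypothetical business rule: any machine carries a high-severity alert."""
        return any(a.severity == "high" for m in self.machines for a in m.alerts)


factories = [
    Factory("Leeds", [Machine("M1", [Alert("A1", "high")]), Machine("M2")]),
    Factory("Pune", [Machine("M3", [Alert("A2", "low")])]),
]
at_risk_count = sum(f.at_risk() for f in factories)
```

In a relational stack, the same answer would need a join across factory, machine, and alert tables; here the links are part of the object model, so the code reads like the business question.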

- Power for Builders: You define the object once, and Foundry automatically generates the APIs and write-back capabilities. You don't need to write CRUD endpoints for every new entity; the platform handles state management, allowing you to focus on the application logic.

- Power for AI: When you point an LLM or Agent at the Ontology, it doesn't need to "guess" the database schema or hallucinate relationships, because it works directly with the business concepts. The LLM knows that a "Machine" has "Alerts" because that relationship is codified in the Ontology, which can substantially improve the accuracy of Retrieval-Augmented Generation (RAG).

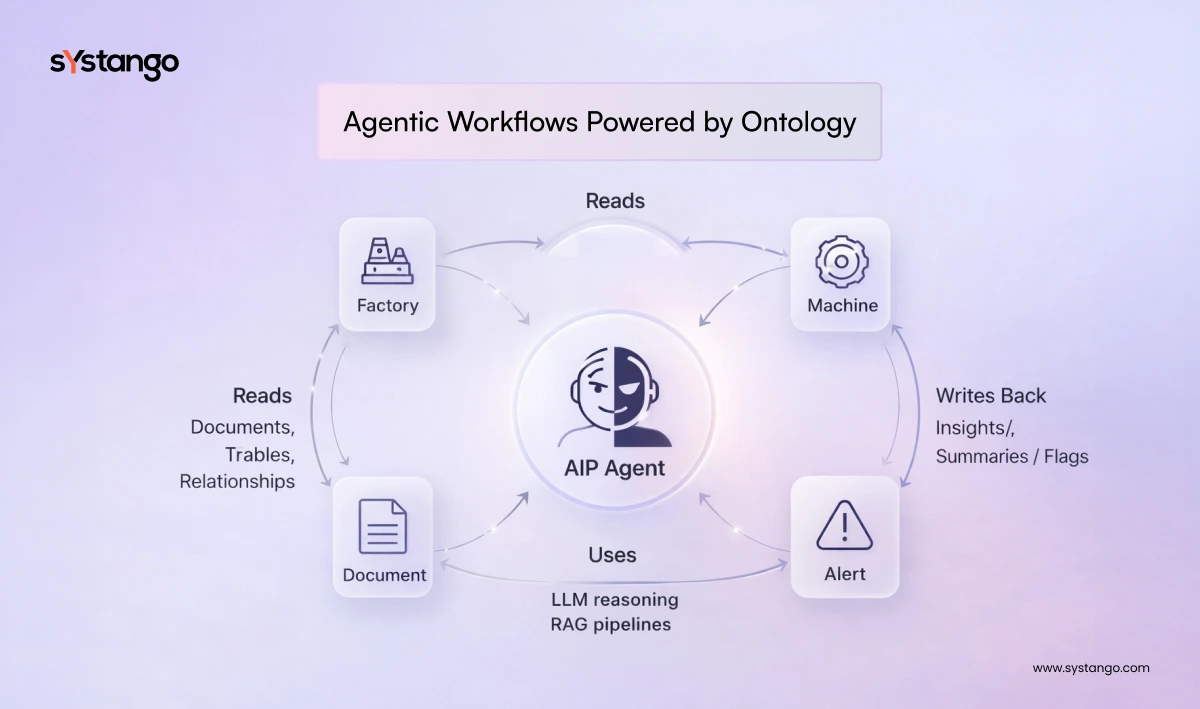

3. GenAI Agents & The Knowledge Graph

This is where the platform truly shines over a home-grown tech stack.

AIP Logic & Agents

Building agents in raw code is fragile; managing context windows and prompt chains by hand is error-prone. In Palantir’s AIP Logic, you can visually chain LLM calls and bind them to your Ontology. I recently configured an AIP Agent to take over a literature search. Instead of manually querying a vector DB, the Agent uses the semantic layer to "read" through thousands of PDF attachments linked to our internal research objects. It can iterate through search results, synthesize the findings, and, crucially, write the summary back into the Ontology as a new property, making that insight permanent and accessible to others.
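The loop the agent runs (retrieve, synthesize, write back) can be sketched in a few lines. AIP Logic is configured visually, so none of this is its API: `search` is a naive keyword match standing in for semantic retrieval, `summarize` is a placeholder for the LLM call, and `ResearchObject` and the `literature_summary` property are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ResearchObject:
    object_id: str
    attachments: list[str]  # extracted text of linked PDFs
    properties: dict[str, str] = field(default_factory=dict)


def search(obj: ResearchObject, query: str) -> list[str]:
    """Hypothetical retrieval step: attachments that mention every query term."""
    terms = query.lower().split()
    return [doc for doc in obj.attachments if all(t in doc.lower() for t in terms)]


def summarize(snippets: list[str]) -> str:
    """Placeholder for the LLM synthesis call: joins the first sentence of each hit."""
    return " ".join(s.split(".")[0].strip() + "." for s in snippets)


def literature_agent(obj: ResearchObject, queries: list[str], max_docs: int = 5) -> str:
    """Iterate over queries, collect distinct hits, synthesize, then write the result back."""
    hits: list[str] = []
    for query in queries:
        for doc in search(obj, query):
            if doc not in hits and len(hits) < max_docs:
                hits.append(doc)
    summary = summarize(hits) if hits else "No relevant attachments found."
    obj.properties["literature_summary"] = summary  # write-back onto the object
    return summary
```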

Palantir Vertex: The Knowledge Graph

Visualizing data is usually limited to bar charts and static dashboards. Vertex (Palantir’s graph exploration tool) allows you to visualize the connectivity of your organization. It turns your data into a navigable map.

- Discovering Relationships: You can see how a "Supplier" connects to a "Part," which connects to a "Delay," which finally connects to a "Customer Order." This visual lineage reveals second- and third-order effects that are invisible in standard tabular reports.

- "What-If" Scenarios: Vertex allows for simulation. You can model "If this supplier goes offline, what downstream orders are affected?" and see the impact ripple through the graph. This creates a "Digital Twin" of your operations, allowing decision-makers to test hypotheses in a safe environment before committing resources in the real world.
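The "supplier goes offline" question is, at its core, reachability in a directed graph. This sketch runs a breadth-first search over a hand-built graph and records hop distance, which separates first-, second-, and third-order effects. Node names are hypothetical, and Vertex's simulations may model far more (quantities, timing) than plain reachability.

```python
from collections import deque

# Directed edges read "X affects Y". All node names are hypothetical examples.
GRAPH: dict[str, list[str]] = {
    "Supplier:Acme": ["Part:P-100", "Part:P-200"],
    "Part:P-100": ["Delay:D-1"],
    "Part:P-200": ["Order:O-3"],
    "Delay:D-1": ["Order:O-1", "Order:O-2"],
}


def downstream_impact(graph: dict[str, list[str]], start: str) -> dict[str, int]:
    """Breadth-first search: every node reachable from `start`, with its hop distance."""
    distance = {start: 0}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for nxt in graph.get(node, []):
            if nxt not in distance:
                distance[nxt] = distance[node] + 1
                queue.append(nxt)
    del distance[start]
    return distance


impact = downstream_impact(GRAPH, "Supplier:Acme")
affected_orders = sorted(n for n in impact if n.startswith("Order:"))
```

Here `Order:O-3` is two hops from the supplier, while `Order:O-1` and `Order:O-2` are three hops away, behind `Delay:D-1`.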

4. Uncompromising Security & Privacy

As developers, we often gloss over security until the compliance team knocks on the door. With GenAI, however, security is the primary blocker to adoption. The risk of data leakage or unauthorized access to sensitive inferences is too high to ignore.

Palantir is famous for its work with defense and intelligence agencies, but what’s impressive is that individual developers get the same security architecture as the Pentagon.

Strict Data Isolation

Foundry uses Organizations and Spaces to enforce strict silos. Even if you are a single developer processing public data, your environment is sandboxed with the same rigor as a classified project. You can apply granular permissions down to individual rows and columns. If a user doesn't have permission to see PII (Personally Identifiable Information), that data simply doesn't exist for them: not in the table, not in search results, and not in the LLM's context.
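The principle that restricted data never reaches the model comes down to ordering: apply row and column restrictions before the prompt is assembled. A minimal sketch, with a hypothetical `User` model, region-based row rules, and a hard-coded PII column list; in Foundry, enforcement happens in the platform's permission layer, not in application code like this.

```python
from dataclasses import dataclass

PII_COLUMNS = {"email", "phone"}  # hypothetical classification of sensitive columns


@dataclass(frozen=True)
class User:
    name: str
    regions: frozenset[str]  # row-level rule: which regions' records are visible
    can_see_pii: bool        # column-level rule: whether PII columns are visible


def visible_rows(rows: list[dict], user: User) -> list[dict]:
    """Apply row filters first, then drop PII columns the user is not cleared for."""
    out = []
    for row in rows:
        if row["region"] not in user.regions:
            continue  # row-level security: the record is simply absent
        if not user.can_see_pii:
            row = {k: v for k, v in row.items() if k not in PII_COLUMNS}
        out.append(row)
    return out


def build_llm_context(rows: list[dict], user: User) -> str:
    """The model only ever receives what this user could have seen directly."""
    return "\n".join(
        "; ".join(f"{k}={v}" for k, v in sorted(r.items()))
        for r in visible_rows(rows, user)
    )
```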

The "No Training" Guarantee

The biggest fear for any company is its proprietary data leaking into a public model. Palantir’s privacy policy and architecture are explicit:

- Your data is NOT used to train Palantir's models.

- LLMs used in AIP do NOT retain your data for training.

According to their AIP Security FAQ, Palantir secures strict contractual and technical guarantees from model providers. No customer data submitted in prompts is used for model training. This allows us to build with confidence, knowing our intellectual property remains ours and isn't inadvertently teaching a competitor's AI how we do business.

5. Maintenance: Keeping the Lights On

Finally, building a pipeline is easy; keeping it running is hard. The platform includes built-in tools that replace entire separate SaaS products:

- Scheduling: Integrated directly into the pipeline graph. You can trigger builds based on time (cron) or events (when new data arrives), ensuring your downstream applications are always fresh.

- Branching: You can branch your data and code just like Git. You can test new pipeline logic on a "dev" branch of the data without corrupting the production ontology. This enables a safe CI/CD workflow for data engineering that is rarely seen in other tools.

- Data Expectations: Built-in health checks (e.g., "Warning if 'price' is negative" or "Error if 'ID' is null") stop bad data from propagating to your agents. This proactive quality assurance prevents the "garbage in, garbage out" problem that plagues many AI projects.
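The warning/error semantics of expectations can be sketched as follows, assuming a hypothetical `Expectation` record and `check` function rather than Foundry's own configuration: warnings are reported and the build continues, while any error stops it before bad rows reach downstream agents. The two checks mirror the examples above.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Expectation:
    description: str
    severity: str  # "warning" or "error"
    row_ok: Callable[[dict], bool]


EXPECTATIONS = [
    Expectation("price is non-negative", "warning",
                lambda r: r.get("price") is None or r["price"] >= 0),
    Expectation("id is not null", "error", lambda r: r.get("id") is not None),
]


class ExpectationFailed(Exception):
    """Raised when an error-level expectation fails, halting the build."""


def check(rows: list[dict], expectations=EXPECTATIONS) -> list[str]:
    """Run each expectation in order; collect warnings, raise on the first failing error."""
    warnings = []
    for exp in expectations:
        bad = sum(1 for r in rows if not exp.row_ok(r))
        if bad == 0:
            continue
        message = f"{exp.description}: {bad} row(s) failed"
        if exp.severity == "error":
            raise ExpectationFailed(message)
        warnings.append(message)
    return warnings
```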

Conclusion

The shift to Palantir isn't just about "better tools"; it's about moving up the stack. By abstracting away the low-level data engineering and providing a secure, semantic layer for AI to interact with, we can build GenAI applications in days that used to take months.

If you are still writing glue code to move JSON files between S3 buckets, it’s time to look at the Ontology.

For more technical details, check out the Palantir Learning Tracks.
