Building a Chat Service Using Micro-services Architecture

Published on 26 Nov 2018

Building a chat service in-house often means a complex implementation and heavy build and test effort, so substantial time goes into the chat service that could instead be spent on the application using it. The alternative is a third-party product, which is usually hard to customise.

What made us build a new Chat Service using Micro-service Architecture?

- We wanted to build a service which could be easily integrated within the applications.

- Different applications should be supported from a single deployed service.

- The service should support a large amount of traffic, delivering the data in real-time with data integrity.

- We wanted to store all the data on the premises for security reasons.

- The service should be lightweight and cost-effective, and it should scale up or down easily depending on the traffic.

- The service should support nearly all the features offered by modern chat services such as WhatsApp, Skype and Facebook Messenger.

- The service should support real-time multiplayer games.

- The service should be easy to customize, so that different applications can enable different features.

Technology Stack

- Node.js (v8.11.0 LTS): a JavaScript runtime whose event-driven, non-blocking I/O model makes it lightweight and efficient, well suited to data-intensive real-time applications that run across distributed devices.

- Socket.io (v2.1.1): enables real-time, bidirectional and event-based communication. It works on every platform, browser or device, focusing equally on reliability and speed.

- Redis (v4.0): in-memory data structure store, used as a database, cache and message broker.

- Sequelize (v4.41.2): a promise-based ORM for Node.js. It supports the PostgreSQL, MySQL, SQLite and MSSQL dialects and features solid transaction support, relations, read replication and more.

- Docker (v18.09): an open platform for developers and sysadmins to build, ship, manage, secure and run distributed applications, whether on laptops, data centre VMs, or the cloud without the fear of technology or infrastructure lock-in.

- EC2 Instance: provides different instance types to enable you to choose the CPU, memory, storage, and networking capacity that you need to run your applications.

- ElastiCache: offers fully managed Redis and Memcached. Seamlessly deploy, run, and scale popular open source compatible in-memory data stores. Build data-intensive apps or improve the performance of your existing apps by retrieving data from high throughput and low latency in-memory data stores.

- Application Load Balancer: serves as the single point of contact for clients. The load balancer distributes incoming application traffic across multiple targets, such as EC2 instances, in multiple Availability Zones. This increases the availability of your application.

- Amazon Relational Database Service (Amazon RDS): makes it easy to set up, operate, and scale a relational database in the cloud. It provides cost-efficient and resizable capacity while automating time-consuming administration tasks such as hardware provisioning, database setup, patching and backups.

Architecture

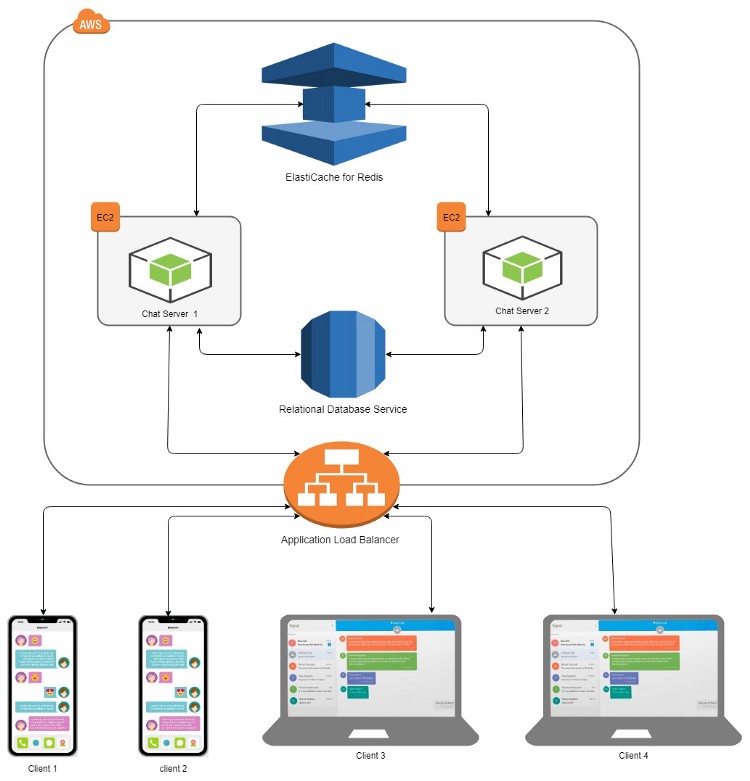

After lots of research and studying the architecture of Applications like WhatsApp and Facebook, we came up with the following Architecture-

Architecture Diagram

Components in the Architecture:

- Clients: Any client that can run a Socket.io client library.

- EC2 Instances: Used to deploy the Chat service. Because Docker is used, the operating system of the instance does not matter. Each chat service installed on an instance is completely independent of the other instances.

- AWS Application Load Balancer: The single point to which all clients connect, using sticky sessions. The ALB distributes the load across the EC2 instances and redirects it if an EC2 instance fails to respond.

- RDS (Relational Database Service): Persists chat-related data. Because Sequelize is used as the ORM, any relational database that Sequelize supports can be used.

- ElastiCache: Provides Redis, which serves as both the in-memory cache and the message broker. The message broker transfers data between two or more Chat services.

Message Delivery

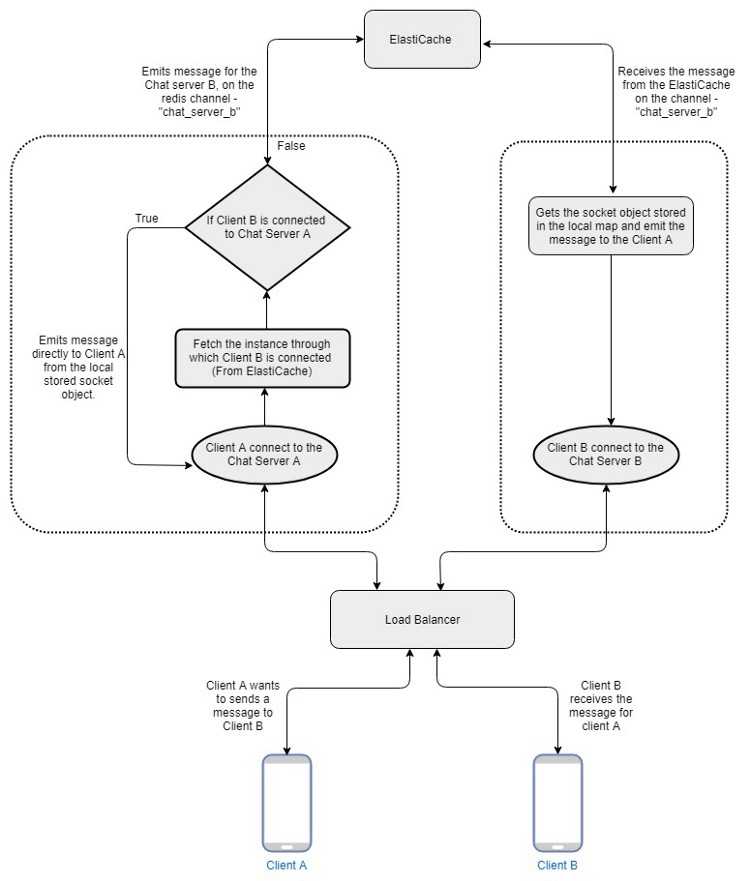

Data Flow Diagram

With the above flow, we were able to achieve real-time messaging while using each component efficiently. For example, not every message needs to be published to Redis. If the recipient of a message is connected to the same chat server as the sender, there is no need to emit it to all the servers; the message can be sent directly to the recipient's client.
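The routing decision described above can be sketched as follows. This is a minimal illustration rather than the service's actual code: the `UserEntry` shape, the `routeMessage` function and the one-channel-per-server naming (`chat:<serverName>`) are assumptions made for the example, and the "pending" branch for offline recipients is inferred from the Pending store described later in the post.

```typescript
// Hypothetical shape of a cached user entry (not taken from the original service).
interface UserEntry {
  username: string;
  serverName: string; // chat server that currently holds the user's socket
  online: boolean;
}

type Route =
  | { kind: "local"; username: string }    // emit directly on this server's socket
  | { kind: "publish"; channel: string }   // hand off through the Redis message broker
  | { kind: "pending"; username: string }; // recipient offline: keep for later delivery

function routeMessage(
  recipient: string,
  users: Map<string, UserEntry>,
  thisServer: string,
): Route {
  const entry = users.get(recipient);
  if (!entry || !entry.online) {
    return { kind: "pending", username: recipient };
  }
  if (entry.serverName === thisServer) {
    // Same server: no need to involve Redis at all.
    return { kind: "local", username: recipient };
  }
  // Assumed convention: one broker channel per chat server.
  return { kind: "publish", channel: `chat:${entry.serverName}` };
}

const users = new Map<string, UserEntry>([
  ["alice", { username: "alice", serverName: "chat-1", online: true }],
  ["bob", { username: "bob", serverName: "chat-2", online: true }],
  ["carol", { username: "carol", serverName: "chat-2", online: false }],
]);

console.log(routeMessage("alice", users, "chat-1"));
console.log(routeMessage("bob", users, "chat-1"));
console.log(routeMessage("carol", users, "chat-1"));
```

Keeping the decision in a pure function like this makes it easy to test without a running Redis or Socket.io server; the caller would then perform the actual emit or publish.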

Data store on ElastiCache

Using ElastiCache, we can avoid database queries on the hot path, since it provides a high-throughput, low-latency in-memory data store. On ElastiCache we maintain two maps: Users and Groups.

When a client connects to a Chat server, the Users map is updated with an entry containing the username, public name, server name and status. This map holds only the minimal client information that needs to be fetched frequently.

The Groups map stores every group along with its members. When a message is sent to a group, the recipients are fetched from this map.
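As an illustration of how the two maps could work together, the sketch below resolves the recipients of a group message and buckets them by the chat server they are connected to. Plain in-memory `Map` objects stand in for the Redis-backed maps, and the `UserInfo` fields, `groupFanout` function and sample data are hypothetical names chosen for the example.

```typescript
// Fields follow the description above: username, public name, server name, status.
interface UserInfo {
  username: string;
  publicName: string;
  serverName: string;
  status: "online" | "offline";
}

interface Fanout {
  byServer: Map<string, string[]>; // server name -> online recipients on that server
  offline: string[];               // recipients that are not currently connected
}

function groupFanout(
  groupId: string,
  sender: string,
  groups: Map<string, string[]>, // group id -> member usernames
  users: Map<string, UserInfo>,
): Fanout {
  const fanout: Fanout = { byServer: new Map(), offline: [] };
  for (const member of groups.get(groupId) ?? []) {
    if (member === sender) continue; // the sender does not receive their own message
    const info = users.get(member);
    if (!info || info.status !== "online") {
      fanout.offline.push(member);
      continue;
    }
    const list = fanout.byServer.get(info.serverName) ?? [];
    list.push(member);
    fanout.byServer.set(info.serverName, list);
  }
  return fanout;
}

const userMap = new Map<string, UserInfo>([
  ["alice", { username: "alice", publicName: "Alice", serverName: "chat-1", status: "online" }],
  ["bob", { username: "bob", publicName: "Bob", serverName: "chat-1", status: "online" }],
  ["carol", { username: "carol", publicName: "Carol", serverName: "chat-2", status: "online" }],
  ["dave", { username: "dave", publicName: "Dave", serverName: "chat-2", status: "offline" }],
]);
const groupMap = new Map<string, string[]>([["team", ["alice", "bob", "carol", "dave"]]]);

const fanout = groupFanout("team", "alice", groupMap, userMap);
console.log(fanout.byServer, fanout.offline);
```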

Database Store

We store data in the database asynchronously, so that persistence never delays message delivery.

- Peer Conversation: For every new pair of peers, an entry is created when they first connect. All the messages sent between the two users share the same conversation id.

- Messages: The messages which need to be persisted are stored here. This store is common to peer conversations and group conversations.

- Pending: The links/ids of all undelivered messages are stored here, irrespective of the type of conversation. When a user comes online, all of that user's messages in this store are delivered and then removed from it.

- Group Conversation: Stores group related information and has a relationship with the Messages and Group members.

- Group Members: Stores members of the group and their roles in the group.
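The Pending store's behaviour when a user comes online can be sketched like this. The example uses an in-memory stand-in for the relational tables; `InMemoryStore`, `StoredMessage`, `PendingRow` and `drainPending` are hypothetical names, and a real implementation would run equivalent queries through the ORM and would probably remove pending rows only after the client confirms receipt.

```typescript
interface StoredMessage {
  id: number;
  conversationId: number;
  body: string;
}

// A pending row only links a recipient to a message id, as described above.
interface PendingRow {
  messageId: number;
  recipient: string;
}

class InMemoryStore {
  messages = new Map<number, StoredMessage>();
  pending: PendingRow[] = [];

  // Return the messages waiting for `recipient`, oldest id first,
  // and remove their rows from the pending list.
  drainPending(recipient: string): StoredMessage[] {
    const mine = this.pending.filter((p) => p.recipient === recipient);
    this.pending = this.pending.filter((p) => p.recipient !== recipient);
    return mine
      .map((p) => this.messages.get(p.messageId))
      .filter((m): m is StoredMessage => m !== undefined)
      .sort((a, b) => a.id - b.id);
  }
}

const store = new InMemoryStore();
store.messages.set(1, { id: 1, conversationId: 10, body: "hi bob" });
store.messages.set(2, { id: 2, conversationId: 10, body: "are you there?" });
store.messages.set(3, { id: 3, conversationId: 11, body: "hi carol" });
store.pending.push(
  { messageId: 2, recipient: "bob" },
  { messageId: 1, recipient: "bob" },
  { messageId: 3, recipient: "carol" },
);

// Bob comes online: his two messages are returned and his pending rows are cleared.
const delivered = store.drainPending("bob");
console.log(delivered.map((m) => m.body));
```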

Pros of the Architecture

- Lightweight and supports real-time message delivery by using Node and Socket.io.

- Easy integration with the other applications, as a micro-service.

- Depending on the load, we can easily scale the service up or down.

- Efficient use of resources to provide high throughput. For example, we do not publish every message to Redis, and we store data in the database asynchronously to avoid any delay.

- Customization is easy: features can be plugged in or out depending on the requirement.

- Deployment is easy and does not require in-depth knowledge of the deployment process.

- Ready to use for one-to-one chat, group chat, online gameplay etc.

- Automatic re-connection of sockets on network drop.

- By default, media sharing is enabled.

Cons of the Architecture

- When working with sockets, a poor network always makes it hard to guarantee packet delivery. We therefore have to write extra code on the client side to detect a poor network and avoid dropped messages.

- The message storage could grow very large over time. To avoid this, we have to archive old messages and move them to a different location, such as S3 buckets.
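One common way to handle the first point is for the client to wait for an acknowledgement and resend if none arrives in time. The sketch below shows that idea in isolation and is not the code used in the service: `sendWithRetry` and the `Send` type are hypothetical, and the transport is abstracted so the example runs without a socket. With Socket.io, `send` could wrap `socket.emit`, which accepts an acknowledgement callback as its last argument.

```typescript
// Abstract transport: deliver `payload` and call `ack` once the server confirms it.
type Send = (payload: string, ack: () => void) => void;

// Resolve with the attempt number that was acknowledged, or reject after maxAttempts.
function sendWithRetry(
  send: Send,
  payload: string,
  timeoutMs: number,
  maxAttempts: number,
): Promise<number> {
  return new Promise((resolve, reject) => {
    let attempt = 0;
    let done = false;

    const tryOnce = (): void => {
      attempt += 1;
      const thisAttempt = attempt;
      // No acknowledgement within timeoutMs: resend or give up.
      const timer = setTimeout(() => {
        if (done) return;
        if (thisAttempt >= maxAttempts) {
          done = true;
          reject(new Error(`no acknowledgement after ${maxAttempts} attempts`));
        } else {
          tryOnce();
        }
      }, timeoutMs);
      send(payload, () => {
        if (done) return; // ignore late or duplicate acknowledgements
        done = true;
        clearTimeout(timer);
        resolve(thisAttempt);
      });
    };

    tryOnce();
  });
}

// Simulated poor network: the first send is silently dropped, the second is acknowledged.
let sendCalls = 0;
const flakySend: Send = (_payload, ack) => {
  sendCalls += 1;
  if (sendCalls >= 2) ack();
};

sendWithRetry(flakySend, "hello", 20, 3).then((attempt) => {
  console.log(`acknowledged on attempt ${attempt}`);
});
```

Because a resend can reach the server after the original did, the server side would need to de-duplicate, for example by a client-generated message id.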

Further scope

- Upload media files through the sockets and have the service store them in S3 buckets. Right now, files are uploaded directly to the S3 buckets and only the URL is shared over the sockets.

- Handle network drops better.

- Provide rotating encryption policies for the messages, with keys that are updated automatically.

- Handle message archiving and storage in S3 buckets, for example by archiving messages older than 6 months.

Wrapping up

Through this solution, we were able to create a simple, lightweight, scalable and customizable chat micro-service application. The architecture supports features like one-to-one chat, group chat, media transfer and online gameplay. It also enables different applications to share a single deployed service, keeping the cost of computation and resources low. The cost could be reduced further depending on load and usage, for example by running a self-managed Redis instance instead of ElastiCache.

For further information, feel free to email us at engineering@systango.com. Our team would love to help with your questions and to shape robust and striking web & mobile apps.